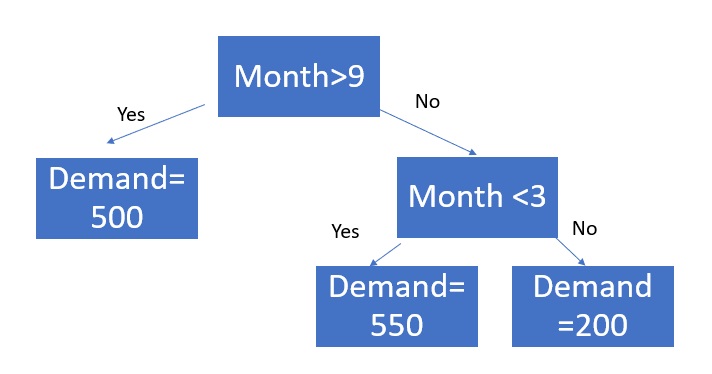

Forecast Pro’s machine learning methodology uses extreme gradient boosted decision trees. A decision tree applies a set of logical rules to a set of features to “decide” an appropriate forecast value. Below is an example of a decision tree.

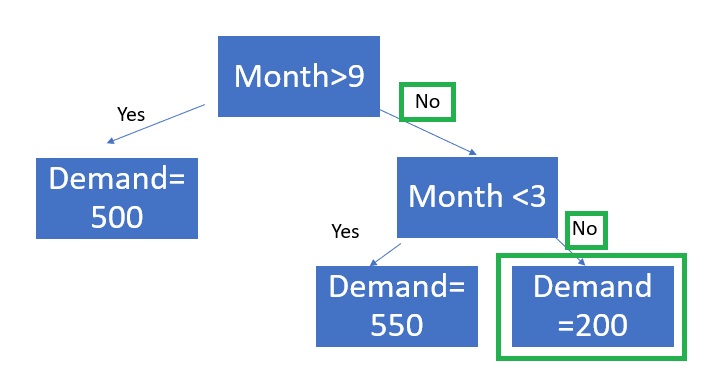

In this case, there is only one feature (month), and the tree depth is two. The tree depth is the length of the longest path from the top of the tree to a terminal node. To generate a forecast, the model starts at the top of the tree and uses the feature values to move through the tree until a terminal (“leaf”) node is reached. Here, the leaf nodes are the “Demand” nodes. For example, suppose it is June, so month = 6.

The demand forecast for June is 200.
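The traversal described above can be sketched in a few lines of Python. This is an illustration only, not Forecast Pro's code: the split thresholds and every leaf value other than the June result of 200 are invented here, and the `Leaf`, `Split`, `predict` and `depth` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass
class Leaf:
    demand: float  # forecast returned when this terminal node is reached


@dataclass
class Split:
    feature: str       # name of the feature tested at this node
    threshold: float   # go left when feature value < threshold, else right
    left: "Node"
    right: "Node"


Node = Union[Leaf, Split]


def predict(node: Node, features: Dict[str, float]) -> float:
    """Walk from the top of the tree to a leaf and return its demand value."""
    while isinstance(node, Split):
        if features[node.feature] < node.threshold:
            node = node.left
        else:
            node = node.right
    return node.demand


def depth(node: Node) -> int:
    """Length of the longest path from this node down to a leaf."""
    if isinstance(node, Leaf):
        return 0
    return 1 + max(depth(node.left), depth(node.right))


# Hypothetical depth-two tree on a single feature (month).
# Thresholds and leaf values are assumptions chosen so that June maps to 200.
tree = Split(
    "month", 7,
    left=Split("month", 4, Leaf(100.0), Leaf(200.0)),
    right=Split("month", 10, Leaf(300.0), Leaf(150.0)),
)

june_forecast = predict(tree, {"month": 6})
print(june_forecast, depth(tree))
```

For month = 6, the first rule (month < 7) sends the model left, the second rule (month < 4) sends it right, and the leaf reached holds the forecast.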

Extreme gradient boosting is an ensemble approach. An ensemble model combines multiple models. Extreme gradient boosting generates multiple decision trees, and the forecast is the sum of the contributions from all trees, each scaled by a learning rate. Boosting algorithms add models sequentially. In each iteration, the new tree is fit to correct the errors left by the previous trees. Extreme gradient boosting finds the tree structure and parameters that optimize a specified objective function, typically mean squared error. Forecast Pro’s implementation uses XGBoost, an open-source library. Please consult the XGBoost documentation for more details on how extreme gradient boosted trees work.
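To make the sequential idea concrete, here is a minimal sketch of plain gradient boosting with squared error, where each new tree is a one-split "stump" fit to the residuals of the ensemble so far. It is a simplification and not XGBoost itself, which adds regularization and second-order gradient information. The demand figures, function names and the learning rate of 0.3 are assumptions made for illustration.

```python
def fit_stump(xs, residuals):
    """Fit a depth-one tree (single split) that minimizes squared error."""
    best = None
    # Each distinct value except the smallest is a candidate threshold,
    # which guarantees both sides of the split are non-empty.
    for t in sorted(set(xs))[1:]:
        left = [r for x, r in zip(xs, residuals) if x < t]
        right = [r for x, r in zip(xs, residuals) if x >= t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x < t else right_mean


def boost(xs, ys, n_trees=20, learning_rate=0.3):
    """Add stumps one at a time, each fit to the current residuals."""
    base = sum(ys) / len(ys)          # initial forecast: the overall mean
    trees = []
    preds = [base] * len(ys)
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]
    # The ensemble forecast is the base value plus the scaled sum of all trees.
    return lambda x: base + learning_rate * sum(t(x) for t in trees)


def mse(model, xs, ys):
    """Mean squared error of a model over paired inputs and targets."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Invented monthly demand history, used only to exercise the sketch.
months = list(range(1, 13))
demand = [100, 100, 100, 200, 200, 200, 300, 300, 300, 150, 150, 150]

for n in (1, 5, 20):
    model = boost(months, demand, n_trees=n)
    print(n, round(mse(model, months, demand), 2))
```

On the training data, the error shrinks as trees are added, because each stump removes part of what the earlier trees left unexplained.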

XGBoost requires several key inputs, including the features, the maximum tree depth and the number of trees the ensemble model should include. These inputs are important determinants of forecast accuracy, and selecting them manually can be difficult. When using automatic univariate machine learning models, Forecast Pro uses its own rule-based algorithm to decide which features to build automatically, and then determines the maximum tree depth and number of trees using a combination of rules and out-of-sample holdout testing.
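The sketch below shows one way these inputs could be chosen outside Forecast Pro, using the open-source XGBoost Python package directly. It does not reproduce Forecast Pro's rules, which are not described here. The feature set (month plus two lags), the candidate grids, and the helper names `make_features` and `select_by_holdout` are all assumptions. The holdout error is a one-step-ahead error that uses actual lagged values.

```python
def make_features(series, start_month, n_lags=2):
    """Build rows of [month, lag 1, ..., lag n] with matching targets.

    `start_month` is the calendar month (1-12) of series[0].
    """
    X, y = [], []
    for i in range(n_lags, len(series)):
        month = (start_month - 1 + i) % 12 + 1
        lags = [series[i - k] for k in range(1, n_lags + 1)]
        X.append([month] + lags)
        y.append(series[i])
    return X, y


def select_by_holdout(X, y, holdout, depths=(1, 2, 3),
                      tree_counts=(25, 50, 100)):
    """Pick max_depth and n_estimators by error on the last `holdout` rows."""
    # Imported lazily so make_features can be used without these packages.
    import numpy as np
    import xgboost as xgb

    X_arr = np.asarray(X, dtype=float)
    y_arr = np.asarray(y, dtype=float)
    X_train, y_train = X_arr[:-holdout], y_arr[:-holdout]
    X_hold, y_hold = X_arr[-holdout:], y_arr[-holdout:]

    best = None
    for depth in depths:
        for n_trees in tree_counts:
            model = xgb.XGBRegressor(
                max_depth=depth,
                n_estimators=n_trees,
                objective="reg:squarederror",
            )
            model.fit(X_train, y_train)
            error = float(np.mean((model.predict(X_hold) - y_hold) ** 2))
            if best is None or error < best[0]:
                best = (error, depth, n_trees)
    return {"mse": best[0], "max_depth": best[1], "n_estimators": best[2]}
```

A typical call would be `X, y = make_features(history, start_month=1)` followed by `select_by_holdout(X, y, holdout=6)`, where `history` is a list of past demand values. The holdout rows are always the most recent ones, so the models are scored on data they did not see during training.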

In addition to the Forecast Pro generated features used in the automatic univariate model, custom machine learning models may also include event schedules and explanatory variables as features in a boosted decision tree ensemble model. You can train custom machine learning models automatically, using the same AI-driven logic that powers Forecast Pro’s automatic univariate machine learning models, or you can specify the boosted decision tree structure yourself.