Overview

Automatic Univariate Machine Learning

Machine learning is available as an automatic univariate model, which may be included in expert selection, or as a custom model. Like exponential smoothing and Box-Jenkins, machine learning models leverage information inherent in the historic data (seasonality, trend) to create variables (called features in machine learning). While the automatic machine learning models rely on only this information, custom machine learning models allow you to include additional explanatory variables and/or events.

Click the Machine Learning icon to use an automatic univariate machine learning model.

- \ML is the modifier associated with Automatic Machine Learning.

Select Expert with ML from the Machine Learning icon drop-down to include machine learning in expert selection for the selected item. This is useful when you want to consider machine learning in expert selection for only a subset of items. This option is available only when machine learning is excluded from expert selection globally via the Performance tab of the Options.

- \MLES is the modifier to include machine learning in expert selection.

Custom Machine Learning Models

To specify a custom machine learning model, select Manage from the Machine Learning icon drop-down to open the Machine Learning dialog.

Name: Forecast Pro names each of your custom machine learning model specifications and saves them in the project’s database. These named specification sets provide a convenient way to apply the same machine learning model specifications to multiple items on the Navigator. The Name drop-down allows you to select previously defined specification sets, create new ones, save the current set using a different name and delete the current set.

Description: The description field allows you to enter a description for the current model selection.

All custom machine learning models will include the same features used in the automatic univariate models (e.g. seasonality/interventions). The custom dialog allows you to include additional variables.

Events: The Events display lists all available event schedules. Check the event schedules you want machine learning to consider as features in the model. Because the event codes have no natural order (that is, event codes 1 and 2 are simply two different events), Forecast Pro creates a separate feature for each event code in an event schedule. The machine learning algorithm will determine which event schedules and event codes should be used in the model.
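The idea of one feature per event code can be sketched as follows. This is only an illustration of the general technique (often called one-hot encoding), not Forecast Pro's internal code; the function name, the schedule name "Promo" and the event codes are hypothetical.

```python
def event_code_features(schedule_name, codes_by_period):
    """Return one 0/1 indicator column per distinct event code (hypothetical helper)."""
    distinct_codes = sorted(set(codes_by_period))
    return {
        f"{schedule_name}_code_{code}": [1 if c == code else 0 for c in codes_by_period]
        for code in distinct_codes
    }


# Made-up schedule over six periods: 0 = no event, 1 and 2 = two unrelated events.
promo_codes = [0, 1, 0, 2, 1, 0]
features = event_code_features("Promo", promo_codes)

for name, column in features.items():
    print(name, column)
```

Because each code gets its own indicator column, the model never treats code 2 as "larger than" code 1.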

Explanatory variables: The Explanatory variables field lists all global and item-specific explanatory variables available in the project. Check the variables that you want Forecast Pro to consider as features in your machine learning model. Do not include categorical or unordered variables as explanatory variables; include them as event schedules instead. Note that the machine learning algorithm will consider all checked variables, but it will only use a variable if it improves the machine learning forecasts.

Automatically Extend: Check the Automatically Extend checkbox if you want Forecast Pro to use expert selection to generate forecasts for any explanatory variable that does not have values provided for all periods in the forecast horizon.

Parameters:

Automatic: Check the Automatic checkbox if you want Forecast Pro to automatically select the Maximum Tree Depth and Number of Trees. The parameters are described below, in the Machine Learning Methodology section.

If you uncheck the Automatic checkbox, the Maximum Tree Depth and Number of Trees spinners become active. Consult the Machine Learning Methodology section below for more details on these parameters.

Maximum Tree Depth: The tree depth is the length of the longest path to a leaf or forecast node. Use the spinner to select a maximum tree depth. Please note that as you increase the tree depth, the in-sample model fit will improve, but the forecasts may be less accurate because deeper trees can overfit the historic data. You should consider using out-of-sample statistics to choose an appropriate maximum tree depth.

Number of Trees: Use this spinner to choose the number of trees to include in the ensemble model. As you increase the number of trees, the in-sample model fit will improve, but the forecasts may be less accurate. You should consider using out-of-sample statistics to choose an appropriate number of trees.

- \ML=name is the modifier to use a custom machine learning model with the specifications defined in name.

Machine Learning Methodology

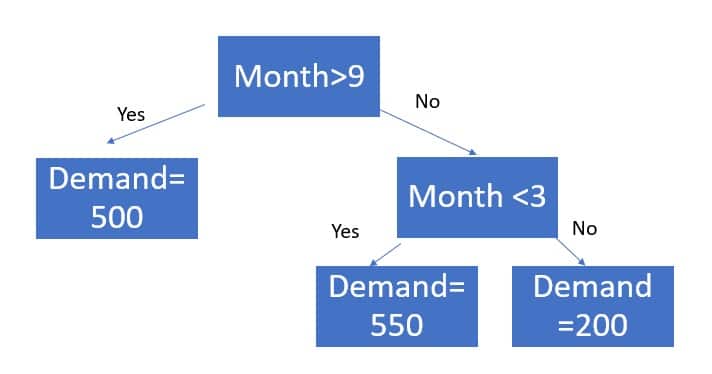

Forecast Pro’s machine learning methodology uses extreme gradient boosted decision trees. A decision tree applies a set of logical rules to a set of features to “decide” an appropriate forecast value. Below is an example of a decision tree.

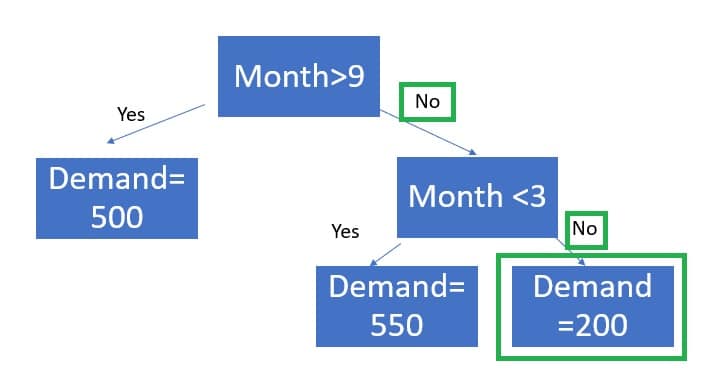

In this case, there is only one feature (month), and tree depth is two. The tree depth is the length of the longest path to a terminal node. To generate a forecast, the model starts at the top of the tree and uses the feature values to move through the tree until a terminal (“leaf”) node is reached. Here, the leaf nodes are the “Demand” nodes. For example, suppose it is June, or month = 6.

The demand forecast for June is 200.
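The walk through a tree can be sketched in a few lines. The tree below is a made-up stand-in for the one in the figure: the split thresholds and three of the four leaf values are assumptions chosen for illustration, and only the June forecast of 200 comes from the text.

```python
# Internal nodes are (feature, threshold, branch_if_below, branch_otherwise);
# leaf ("Demand") nodes are plain numbers. All thresholds are hypothetical.
TREE = ("month", 7,
        ("month", 4, 100.0, 200.0),
        ("month", 10, 300.0, 150.0))


def predict(node, features):
    """Start at the top of the tree and follow the rules until a leaf is reached."""
    while isinstance(node, tuple):
        name, threshold, below, otherwise = node
        node = below if features[name] < threshold else otherwise
    return node


def depth(node):
    """Length of the longest path from this node to a leaf."""
    if not isinstance(node, tuple):
        return 0
    return 1 + max(depth(node[2]), depth(node[3]))


print(predict(TREE, {"month": 6}))  # 200.0
print(depth(TREE))                  # 2
```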

Extreme gradient boosting is an ensemble approach; that is, it combines multiple models. It generates multiple decision trees, and the forecast combines the predictions of all of the trees (in XGBoost, by summing each tree's contribution). Boosting algorithms add models sequentially: in each iteration, the new tree is fit to correct the errors remaining from the previous trees. Extreme gradient boosting finds the tree structure and parameters that optimize a specified objective function, typically mean squared error. Forecast Pro’s implementation uses XGBoost, an open-source library. Please consult the XGBoost documentation for more details on how extreme gradient boosted trees work.
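The sequential nature of boosting can be illustrated with a deliberately small sketch. It is plain gradient boosting with squared error and depth-1 trees, not XGBoost itself (which adds regularization and other refinements) and not Forecast Pro's implementation. The demand values, the learning rate of 0.5 and all function names are assumptions made for the example.

```python
def fit_stump(xs, residuals):
    """Find the depth-1 tree (one split, two leaves) with the lowest squared error."""
    best = None
    for threshold in sorted(set(xs))[1:]:
        left = [r for x, r in zip(xs, residuals) if x < threshold]
        right = [r for x, r in zip(xs, residuals) if x >= threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left leaf value, right leaf value)


def stump_value(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x < threshold else right_mean


def boost(xs, ys, n_trees, learning_rate=0.5):
    """Add trees one at a time; each new tree is fit to the current errors."""
    base = sum(ys) / len(ys)
    fitted = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - f for y, f in zip(ys, fitted)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        fitted = [f + learning_rate * stump_value(stump, x)
                  for f, x in zip(fitted, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    """Base value plus the scaled contribution of every tree."""
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_value(s, x) for s in stumps)


def in_sample_mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Made-up monthly demand with a seasonal peak in summer.
months = list(range(1, 13))
demand = [100, 110, 130, 160, 190, 200, 210, 205, 180, 150, 120, 105]

for n_trees in (1, 5, 20):
    model = boost(months, demand, n_trees)
    print(n_trees, round(in_sample_mse(model, months, demand), 1))
```

In this sketch the in-sample error keeps falling as trees are added, which says nothing about how well the model forecasts periods it has not seen.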

XGBoost requires several key inputs, including features, maximum tree depth and the number of trees the ensemble model should include. These inputs are important determinants of forecast accuracy, and manually selecting them can be difficult. When using automatic univariate machine learning models, Forecast Pro uses its own rule-based algorithm to decide which features to automatically build and then automatically determines the maximum tree depth and number of trees using a combination of rules and out-of-sample holdout testing.
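Forecast Pro's selection rules are not documented here, but the general idea of out-of-sample holdout testing can be sketched as follows: withhold the most recent periods, fit each candidate setting on the remaining history, and keep the setting with the lowest error on the withheld periods. Everything in the example is an assumption made for illustration. In particular, a simple moving average stands in for the boosted tree model, and its window length plays the role that maximum tree depth and number of trees would play.

```python
def holdout_mse(series, holdout, forecast_fn, setting):
    """Fit on all but the last `holdout` periods and score on the withheld ones."""
    train, test = series[:-holdout], series[-holdout:]
    forecasts = forecast_fn(train, len(test), setting)
    return sum((a - f) ** 2 for a, f in zip(test, forecasts)) / len(test)


def select_setting(series, holdout, forecast_fn, candidates):
    """Return the candidate with the lowest holdout error, plus all scores."""
    scores = {c: holdout_mse(series, holdout, forecast_fn, c) for c in candidates}
    return min(scores, key=scores.get), scores


def moving_average_forecast(train, horizon, window):
    """Stand-in model: repeat the mean of the last `window` observations."""
    level = sum(train[-window:]) / window
    return [level] * horizon


# Made-up history with a level shift partway through.
history = [50, 52, 49, 51, 50, 80, 82, 79, 81, 80, 81, 79]

best, scores = select_setting(history, holdout=3,
                              forecast_fn=moving_average_forecast,
                              candidates=[2, 4, 8])
print(best, scores)
```

The same two helper functions would work with any model and any hashable candidate, for example (maximum tree depth, number of trees) pairs.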

In addition to the Forecast Pro generated features used in the automatic univariate model, custom machine learning models may also include event schedules and explanatory variables as features in a boosted decision tree ensemble model. You can automatically train custom machine learning models using the same AI-driven logic that powers Forecast Pro’s automatic machine learning univariate models, or you can set the boosted decision tree structure yourself by choosing the maximum tree depth and number of trees.

Machine Learning Forecast Report

Because ensemble models involve multiple models, it is difficult to summarize the impact of an individual feature on demand in the same way that you can with coefficients for dynamic regression or indexes for exponential smoothing. Instead of showing the size or direction of the impact of a feature on demand, Forecast Pro shows the relative importance of a feature in the ensemble model.

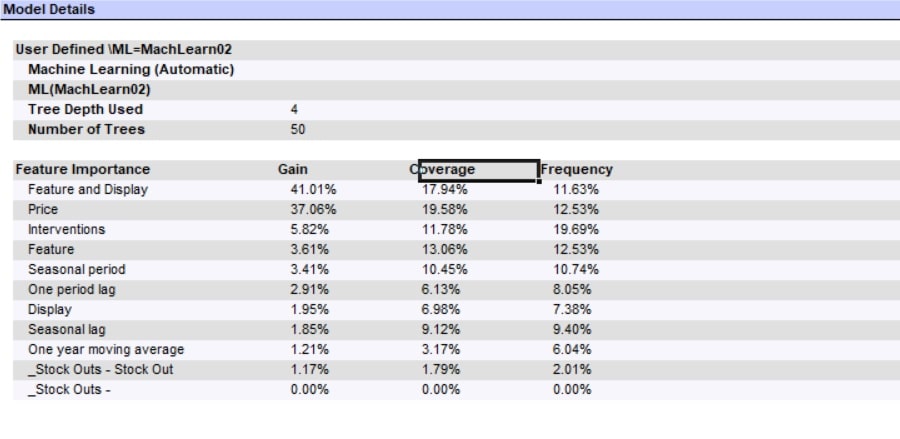

The above Model Details section of the Forecast Report is for a custom model with automatic parameter selection. Forecast Pro has used a maximum tree depth of 4 and 50 trees. The model includes 4 explanatory variables (Price, Feature and Display, Feature, Display) and one Event Schedule (Stock_outs) with 2 event codes (Stock Out and No Event). The remaining variables were automatically generated by Forecast Pro.

There are three importance measures displayed for each feature: Gain, Coverage and Frequency. Gain measures the percentage of overall model improvement or accuracy that is attributable to a feature. Coverage shows the relative number of observations whose forecasts are decided by splits on a feature. Frequency is the percentage of tree splits associated with a feature. Gain is widely considered to be the most relevant feature importance measure for forecasting. For more details on feature importance computations, please consult the XGBoost documentation.
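As a rough sketch of how such percentages can arise, the example below assumes that each measure is a feature's share of the corresponding total over all splits in the ensemble. That assumption, the split records and the function name are illustrative only; they are not taken from Forecast Pro, and the XGBoost documentation remains the authority on the exact computations.

```python
from collections import defaultdict

# Hypothetical split records: (feature, gain from the split, observations reaching it).
SPLITS = [
    ("Price", 40.0, 100),
    ("Price", 20.0, 60),
    ("Month", 30.0, 100),
    ("Stock_outs", 10.0, 40),
]


def importance_shares(splits):
    """Express each feature's gain, coverage and split count as a percentage of the total."""
    totals = defaultdict(lambda: {"gain": 0.0, "coverage": 0.0, "frequency": 0.0})
    for feature, gain, cover in splits:
        totals[feature]["gain"] += gain
        totals[feature]["coverage"] += cover
        totals[feature]["frequency"] += 1
    grand = {k: sum(t[k] for t in totals.values())
             for k in ("gain", "coverage", "frequency")}
    return {
        feature: {k: 100.0 * t[k] / grand[k] for k in grand}
        for feature, t in totals.items()
    }


shares = importance_shares(SPLITS)
for feature, measures in shares.items():
    print(feature, {k: round(v, 1) for k, v in measures.items()})
```

Within each measure the percentages sum to 100 across features, so they describe relative importance rather than the size or direction of a feature's effect.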
